By Dr. Barna Szabó

Engineering Software Research and Development, Inc.

St. Louis, Missouri USA

While I was reading David McCullough’s book “The Wright Brothers”, a fascinating account of the development of the first flying machine, a question occurred to me: Would the Wright brothers have succeeded if they had performed substantially fewer physical experiments and relied on finite element modeling instead? I believe that the answer is no. Consider what happened in the JSF program.

Lessons from the JSF Program

In 1992, eighty-nine years after the Wright brothers’ Flying Machine first flew at Kitty Hawk, the US government decided to fund the design and manufacture of a fifth-generation fighter aircraft combining air-to-air, strike, and ground attack capabilities. Persuaded that numerical simulation technology was sufficiently mature, the decision-makers permitted the manufacturer to build and test the aircraft concurrently. The aircraft, known as the Joint Strike Fighter (JSF) or F-35, first flew in 2006. By 2014, the program was 163 billion dollars over budget and seven years behind schedule.

Two senior officers illuminated the situation in these words:

Vice Admiral David Venlet, the Program Executive Officer, quoted in AOL Defense in 2011 [1]: “JSF’s build and test was a miscalculation…. Fatigue testing and analysis are turning up so many potential cracks and hot spots in the Joint Strike Fighter’s airframe that the production rate of the F-35 should be slowed further over the next few years… The cost burden sucks the wind out of your lungs”.

Gen. Norton Schwartz, Air Force Chief of Staff, quoted in Defense News, 2012 [2]: “There was a view that we had advanced to a stage of aircraft design where we could design an airplane that would be near perfect the first time it flew. I think we actually believed that. And I think we’ve demonstrated in a compelling way that that’s foolishness.”

These officers believed that the software tools were so advanced that testing would merely confirm the validity of the design decisions based on them. This turned out to be wrong. Their belief was not entirely unreasonable, however. By the start of the JSF program, commercial finite element analysis (FEA) software products were more than 30 years old, so it was natural to assume that their reliability had greatly improved, as had the hardware and the visualization tools. Those tools could produce impressive color images that tacitly suggested the underlying methodology was capable of guaranteeing the quality and reliability of the output quantities. Indeed, there were very significant advances in the science of finite element analysis during that period, and it became a bona fide branch of applied mathematics. The problem was that commercial FEA software tools did not keep pace with those important scientific developments.

There are at least two reasons for this. First, the software architecture of the commercial finite element codes was based on the thinking of the 1960s and 1970s, when the theoretical foundations of FEA had not yet been established. As a result, several limitations were built into these codes, and those limitations kept developers from incorporating later advances such as a posteriori error estimation, advanced discretization strategies, and stability criteria. Second, decision-makers who rely on computed information failed to specify the technical requirements that simulation software must meet, for example, that it report not just the quantities of interest but also their estimated relative errors. To fulfill this key requirement, legacy FE software would have had to be overhauled to such an extent that only its nameplate would have remained the same.
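
The reporting requirement described above, that each quantity of interest be delivered together with its estimated relative error, can be illustrated with a minimal Python sketch. The class name `QuantityOfInterest`, its fields, and the tolerance check are hypothetical, chosen only for illustration; they are not taken from any commercial FEA product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuantityOfInterest:
    """Hypothetical record pairing a computed quantity with its error estimate."""

    name: str
    value: float
    estimated_relative_error: float  # fraction, e.g. 0.01 means 1 %

    def is_acceptable(self, tolerance: float) -> bool:
        # The result is usable only if the estimated error is within tolerance.
        return self.estimated_relative_error <= tolerance

    def report(self) -> str:
        # Always state the value together with its estimated relative error.
        percent = 100.0 * self.estimated_relative_error
        return f"{self.name} = {self.value:.4g} (estimated relative error {percent:.2f} %)"


if __name__ == "__main__":
    # Illustrative numbers only.
    q = QuantityOfInterest("max von Mises stress", 512.3, 0.012)
    print(q.report())
    print("acceptable at 2 % tolerance:", q.is_acceptable(0.02))
```

Making the error estimate a required field means that, in this sketch, a result simply cannot be constructed without one.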

Technical Requirements for CbA

Certification by Analysis (CbA) uses validated computer simulations to demonstrate compliance with regulations, replacing some traditional physical tests. CbA makes it possible to explore a wide range of design scenarios, accelerates innovation, lowers costs, and upholds rigorous safety standards. The key to CbA is reliability: the data generated by numerical simulation should be as trustworthy as data generated by carefully conducted physical experiments. To achieve that goal, it is necessary to control two fundamentally different types of error, the model form error and the numerical approximation error, and to use the models only within their domains of calibration.

Model form errors occur because we invariably make simplifying assumptions when we formulate mathematical models. For example, formulations based on the theory of linear elasticity assume that the stress-strain relationship is linear regardless of the magnitude of the strain, and that the deformation is so small that the difference between the equilibrium equations written on the undeformed and deformed configurations can be neglected. As long as these assumptions hold, the linear theory of elasticity provides reliable estimates of the response of elastic bodies to applied loads. The linear solution also indicates the extent to which the assumptions were violated in a particular model. For example, if the strains are found to exceed the proportional limit, it is advisable to check the effects of plastic deformation. The resulting nonlinear problem is solved iteratively until a convergence criterion is satisfied. Similarly, the effects of large deformation can be estimated. Model form errors are controlled by viewing any mathematical model as one in a sequence of hierarchic models of increasing complexity and selecting the model that is consistent with the conditions of the simulation.
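
The screening step described above can be sketched in Python. Assumptions: the linear solution supplies the maximum strain and the maximum displacement, and the proportional-limit strain of the material is known. The function name `assumption_violations` and the 1 % displacement-to-length threshold used to flag large deformation are hypothetical choices for illustration, not established criteria. A flagged assumption signals that the next model in the hierarchy should be solved and its results compared with the linear ones.

```python
from typing import List


def assumption_violations(
    max_strain: float,
    proportional_limit_strain: float,
    max_displacement: float,
    characteristic_length: float,
    small_deformation_ratio: float = 0.01,  # hypothetical threshold
) -> List[str]:
    """Return the linear-elasticity assumptions that a linear solution appears to violate."""
    violations = []
    # Linear stress-strain law: questionable once strains pass the proportional limit.
    if max_strain > proportional_limit_strain:
        violations.append("plasticity")
    # Small-deformation assumption: crude check of displacement relative to part size.
    if max_displacement / characteristic_length > small_deformation_ratio:
        violations.append("large deformation")
    return violations


if __name__ == "__main__":
    # Illustrative values: strain exceeds the limit, displacement is 0.5 % of the length.
    print(assumption_violations(0.003, 0.002, 0.5, 100.0))
```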

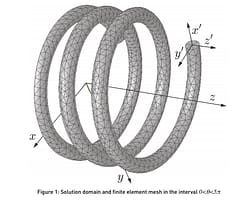

Numerical errors are the errors incurred in approximating the exact solution of mathematical problems, such as the equations of elasticity, the Navier-Stokes equations, and Maxwell's equations, and in extracting the quantities of interest from the approximate solution. The goal of solution verification is to show that the numerical errors in the quantities of interest are within acceptable bounds.
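
A common way to estimate the numerical error in a quantity of interest is to compute it on a sequence of successively refined discretizations (for instance, by increasing the polynomial degree of the elements) and extrapolate. The Python sketch below uses Aitken's delta-squared extrapolation, a standard technique chosen here for illustration. It assumes three solutions whose errors decrease monotonically by a roughly constant factor, and it is not necessarily the estimator used in any particular software product. The function name `extrapolate_qoi` is hypothetical.

```python
from typing import Tuple


def extrapolate_qoi(q1: float, q2: float, q3: float) -> Tuple[float, float]:
    """Estimate the limit value and the relative error of q3 from three successive solutions.

    Uses Aitken's delta-squared extrapolation, which is exact when the error
    decreases by a constant factor from one solution to the next.
    """
    d1 = q2 - q1
    d2 = q3 - q2
    # Require monotone, shrinking differences; otherwise extrapolation is unreliable.
    if d1 * d2 <= 0 or abs(d2) >= abs(d1):
        raise ValueError("sequence is not converging monotonically")
    q_limit = q3 - d2 * d2 / (d2 - d1)
    if q_limit == 0:
        raise ValueError("relative error undefined for a zero limit value")
    relative_error = abs(q_limit - q3) / abs(q_limit)
    return q_limit, relative_error


if __name__ == "__main__":
    # Illustrative values of a quantity of interest at three successive refinements.
    q_limit, rel_err = extrapolate_qoi(104.0, 102.0, 101.0)
    print(f"extrapolated value {q_limit:.4g}, estimated relative error {100 * rel_err:.2f} %")
```

If the three values do not converge monotonically, the function refuses to produce an estimate rather than report a number that cannot be justified.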

The domain of calibration defines the intervals of physical parameters and input data over which the model was calibrated. This is a relatively new concept, introduced in 2021 [3] and also addressed in a forthcoming paper [4]. A common mistake in simulation is to use models outside their domains of calibration.
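
A minimal Python sketch of checking whether a simulation stays within a model's domain of calibration, assuming the domain can be described by an independent interval for each parameter. This is a simplification; the formulation in the cited papers may be more general. The class and method names and the parameters shown are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class DomainOfCalibration:
    """Hypothetical record of the parameter intervals on which a model was calibrated."""

    intervals: Dict[str, Tuple[float, float]]  # parameter name -> (lower, upper)

    def out_of_domain(self, inputs: Dict[str, float]) -> List[str]:
        """Return the calibrated parameters that are missing from, or outside, the inputs."""
        flagged = []
        for name, (lower, upper) in self.intervals.items():
            value = inputs.get(name)
            # A missing value cannot be checked, so it is flagged as well.
            if value is None or not (lower <= value <= upper):
                flagged.append(name)
        return flagged


if __name__ == "__main__":
    # Illustrative intervals and inputs only.
    domain = DomainOfCalibration({"temperature_C": (20.0, 120.0), "thickness_mm": (2.0, 10.0)})
    print(domain.out_of_domain({"temperature_C": 150.0, "thickness_mm": 5.0}))
```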

Organizational Aspects

To achieve the level of reliability in numerical simulation necessary for CbA, management will have to implement simulation governance [5] and apply the protocols of verification, validation, and uncertainty quantification.

Are We There Yet?

No, we are not there yet. Although we have made significant progress in controlling model form errors and numerical approximation errors, one very large obstacle remains: management has yet to recognize that it is responsible for simulation governance, which is a critical prerequisite for CbA.

References

[1] Whittle, R. JSF’s Build and Test was ‘Miscalculation,’ Adm. Venlet Says; Production Must Slow. [Online]. https://breakingdefense.com/2011/12/jsf-build-and-test-was-miscalculation-production-must-slow-v/ [Accessed 21 February 2024].

[2] Weisgerber, M. DoD Anticipates Better Price on Next F-35 Batch. Gannett Government Media Corporation, 8 March 2012. [Online]. https://tinyurl.com/282cbwhs [Accessed 22 February 2024].

[3] Szabó, B. and Babuška, I. Methodology of model development in the applied sciences. Journal of Computational and Applied Mechanics, 16(2), pp. 75-86, 2021 [open source].

[4] Szabó, B. and Actis, R. The demarcation problem in the applied sciences. To appear in Computers & Mathematics with Applications, 2024. The manuscript is available on request.

[5] Szabó, B. and Actis, R. Planning for Simulation Governance and Management: Ensuring Simulation is an Asset, not a Liability. Benchmark, July 2021.

Related Blogs:

- Where Do You Get the Courage to Sign the Blueprint?

- A Memo from the 5th Century BC

- Obstacles to Progress

- Why Finite Element Modeling is Not Numerical Simulation?

- XAI Will Force Clear Thinking About the Nature of Mathematical Models

- The Story of the P-version in a Nutshell

- Why Worry About Singularities?

- Questions About Singularities

- A Low-Hanging Fruit: Smart Engineering Simulation Applications

- The Demarcation Problem in the Engineering Sciences

- Model Development in the Engineering Sciences

Serving the Numerical Simulation community since 1989
