By Dr. Barna Szabó

Engineering Software Research and Development, Inc.

St. Louis, Missouri USA

Digital transformation, digital twins, certification by analysis, and AI-assisted simulation projects are generating considerable interest in engineering communities. For these initiatives to succeed, the reliability of numerical simulations must be assured. This can happen only if management understands that simulation governance is an essential prerequisite for success and undertakes to establish and enforce quality control standards for all simulation projects.

The idea of simulation governance is so simple that it is self-evident: Management is responsible for the exercise of command and control over all aspects of numerical simulation. The formulation of technical requirements is not at all simple, however. A notable obstacle is the widespread confusion of the practice of finite element modeling with numerical simulation. This misconception is fueled by marketing hyperbole, falsely suggesting that purchasing a suite of software products is equivalent to outsourcing numerical simulation.

At present, a very substantial unrealized potential exists in numerical simulation. Simulation technology has matured to the point where management can realistically expect the reliability of predictions based on numerical simulations to match the reliability of observations in physical experimentation. Realizing this potential will require management to upgrade simulation practices by exercising simulation governance.

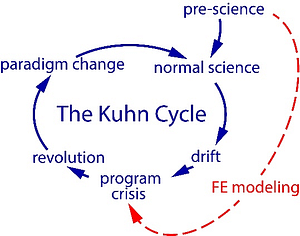

The Kuhn Cycle

The development of numerical simulation technology falls under the broad category of scientific research programs, which encompass model development projects in the engineering and applied sciences as well. By and large, these programs follow the pattern of the Kuhn Cycle [1] illustrated schematically in Fig. 1 in blue:

A period of pre-science is followed by normal science. In this period, researchers have agreed on an explanatory framework (paradigm) that guides the development of their models and algorithms. Program (or model) drift sets in when problems are identified for which solutions cannot be found within the confines of the current paradigm. A program crisis occurs when the drift becomes excessive and attempts to remove the limitations are unsuccessful. Program revolution begins when candidates for a new approach are proposed. This eventually leads to the emergence of a new paradigm, which then becomes the explanatory framework for the new normal science.

The Development of Finite Element Analysis

The development of finite element analysis followed a similar pattern. The period of pre-science began in 1956 and lasted until about 1970. In this period, engineers who were familiar with the matrix methods of structural analysis were trying to extend that method to stress analysis. The formulation of the algorithms was based on intuition; testing was based on trial and error, and arguing from the particular to the general (a logical fallacy) was common.

Normal science began in the early 1970s when the mathematical foundations of finite element analysis were addressed in the applied mathematics community. By that time, the major finite element modeling software products in use today were under development. Those development efforts were largely motivated by the needs of the US space program. The developers adopted a software architecture based on pre-science thinking. I will refer to these products as legacy FE software. For example, NASTRAN, ANSYS, MARC, and Abaqus are all based on the understanding of the finite element method (FEM) that existed before 1970.

Mathematical analysis of the finite element method identified a number of conceptual errors. However, the conceptual framework of mathematical analysis and the language used by mathematicians were foreign to the engineering community, and there was no meaningful interaction between the two communities.

The scientific foundations of finite element analysis were firmly established by 1990, and finite element analysis became a branch of applied mathematics. This means that, for a very large class of problems that includes linear elasticity, the conditions for stability and consistency were established, estimates were obtained for convergence rates, and solution verification procedures were developed, as were elegant algorithms for superconvergent extraction of quantities of interest such as stress intensity factors. I was privileged to have worked closely with Ivo Babuška, an outstanding mathematician who is rightfully credited with many key contributions.
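To make "convergence rates" and "solution verification" concrete, here is a hedged illustration. It is not ESRD's procedure and not the p-version error estimator; it is classical Richardson extrapolation, one generic verification technique. Given a quantity of interest computed on three meshes refined by a constant ratio, it estimates the observed convergence rate, the limit value, and the relative error of the finest result. The function name `richardson_estimate` and the synthetic data are hypothetical, and the method assumes the three solutions already lie in the asymptotic range of convergence.

```python
import math


def richardson_estimate(q_coarse, q_medium, q_fine, ratio):
    """Estimate the observed convergence order, the extrapolated limit, and the
    relative error of the finest solution from three uniformly refined meshes.

    Assumes the asymptotic form Q(h) = Q_exact + C * h**p and a constant
    refinement ratio: ratio = h_coarse / h_medium = h_medium / h_fine > 1.
    """
    d1 = q_medium - q_coarse  # change from coarse to medium mesh
    d2 = q_fine - q_medium    # change from medium to fine mesh
    # Monotone convergence: successive changes shrink and keep the same sign.
    if d1 * d2 <= 0 or abs(d2) >= abs(d1):
        raise ValueError("sequence is not monotonically converging")
    order = math.log(d1 / d2) / math.log(ratio)
    q_extrapolated = q_fine + d2 / (ratio**order - 1.0)
    relative_error = abs(q_extrapolated - q_fine) / abs(q_extrapolated)
    return order, q_extrapolated, relative_error


# Synthetic data generated from Q(h) = 1 + 0.5*h**2 on h = 0.4, 0.2, 0.1.
samples = [1 + 0.5 * h**2 for h in (0.4, 0.2, 0.1)]
order, q_est, err = richardson_estimate(*samples, ratio=2.0)
print(f"order ≈ {order:.3f}, limit ≈ {q_est:.4f}, relative error ≈ {err:.2%}")
# prints: order ≈ 2.000, limit ≈ 1.0000, relative error ≈ 0.50%
```

The point of the sketch is that the computed value is reported together with an estimate of its numerical approximation error. If the estimated error exceeds the tolerance set for the quantity of interest, the discretization has to be refined further.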

Normal science continues in the mathematical sphere, but it has no influence on the practice of finite element modeling. As indicated in Fig. 1, the practice of finite element modeling is rooted in the pre-science period of finite element analysis, and having bypassed the period of normal science, it reached the stage of program crisis decades ago.

Evidence of Program Crisis

The knowledge base of the finite element method in the pre-science period was a small fraction of what it is today. The technical differences between finite element modeling and numerical simulation are addressed in one of my earlier blog posts [2]. Here, I note that decision-makers who have to rely on computed information have reasons to be disappointed. For example, the Air Force Chief of Staff, Gen. Norton Schwartz, was quoted in Defense News, 2012 [3] saying: “There was a view that we had advanced to a stage of aircraft design where we could design an airplane that would be near perfect the first time it flew. I think we actually believed that. And I think we’ve demonstrated in a compelling way that that’s foolishness.”

General Schwartz expected that the reliability of predictions based on numerical simulation would be similar to the reliability of observations in physical tests. This expectation was not unreasonable considering that by that time, legacy FE software tools had been under development for more than 40 years. What the general did not know was that, while the user interfaces greatly improved and impressive graphic representations could be produced, the underlying solution methodology was (and still is) based on pre-1970s thinking.

For this reason, efforts to integrate finite element modeling with artificial intelligence and to establish digital twins based on finite element modeling will surely end in failure.

Paradigm Change Is Necessary

Paradigm change is never easy. Max Planck observed: “A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die, and a new generation grows up that is familiar with it.” This is often paraphrased as: “Progress occurs one funeral at a time.” Planck was referring to the foundational sciences and changing academic minds. The situation is more challenging in the engineering sciences, where practices and procedures are often deeply embedded in established workflows, and changing workflows is typically difficult and expensive.

What Should Management Do?

First and foremost, management should understand that simulation is one of the most abused words in the English language. Furthermore:

- Treat any marketing claim involving simulation with an extra dose of skepticism. Prior to undertaking projects in the areas of digital transformation, certification by analysis, digital twins, and AI-assisted simulation, ensure that the mathematical models produce reliable predictions.

- Recognize the difference between finite element modeling and numerical simulation.

- Understand that mathematical models produce reliable predictions only within their domains of calibration.

- Treat model form and numerical approximation errors separately and require error control in the formulation and application of mathematical models.

- Do not accept computed data without error metrics.

- Understand that model development projects are open-ended.

- Establish conditions favorable for the evolutionary development of mathematical models.

- Become familiar with the concepts and terminology in reference [4]. For additional information on simulation governance, I recommend ESRD’s website.

References

[1] Kuhn, T. S. The Structure of Scientific Revolutions. Vol. 962. University of Chicago Press, 1997.

[2] Szabó, B. Why Finite Element Modeling is Not Numerical Simulation? ESRD Blog, November 2, 2023. https://www.esrd.com/why-finite-element-modeling-is-not-numerical-simulation/.

[3] Weisgerber, M. DoD Anticipates Better Price on Next F-35 Batch. Gannett Government Media Corporation, 8 March 2012. [Online]. Available: https://tinyurl.com/282cbwhs.

[4] Szabó, B. and Actis, R. The demarcation problem in the applied sciences. Computers and Mathematics with Applications, Vol. 162, pp. 206–214, 2024.

Serving the Numerical Simulation community since 1989
