By Dr. Barna Szabó

Engineering Software Research and Development, Inc.

St. Louis, Missouri USA

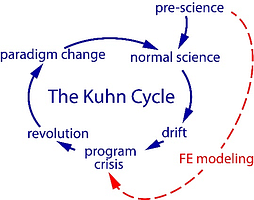

The idea of achieving convergence by increasing the polynomial degree (p) of the approximating functions on a fixed mesh, known as the p-version of the finite element method, was at odds with the prevailing view in the finite element research community in the 1960s and 1970s.

The accepted paradigm was that elements should have a fixed polynomial degree, and convergence should be achieved by decreasing the size of the largest element of the mesh, denoted by h. This approach came to be called the h-version of the finite element method. This view greatly influenced the architecture of legacy finite element software in ways that made it inhospitable to later developments.

The finite element research community rejected the idea of the p-version of the finite element method with nearly perfect unanimity, predicting that “it would never work”. The reasons given are listed below.

Why the p-version “would never work”

The first objection was that the system of equations would become ill-conditioned at high p-levels. − This problem was solved by proper selection of the basis functions [1].
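The effect of the basis on conditioning can be illustrated with a minimal one-dimensional sketch. It is an illustration under stated assumptions, not the construction given in [1]: it compares only the internal ("bubble") block of the stiffness matrix on the standard element (-1, 1), once for Lagrange shape functions on equally spaced nodes and once for hierarchic shape functions built from integrals of Legendre polynomials, which is one well-known choice. The function names are hypothetical.

```python
import math
import numpy as np
from numpy.polynomial import Polynomial, Legendre
from numpy.polynomial.legendre import leggauss


def stiffness(derivs, n_quad):
    """K_ij = integral over (-1, 1) of N_i'(x) N_j'(x), by Gauss-Legendre quadrature."""
    x, w = leggauss(n_quad)
    d = np.array([f(x) for f in derivs])  # rows: derivative values at quadrature points
    return (d * w) @ d.T


def lagrange_internal_derivs(p):
    """Derivatives of the Lagrange shape functions at the interior equispaced nodes."""
    nodes = np.linspace(-1.0, 1.0, p + 1)
    derivs = []
    for i in range(1, p):
        others = np.delete(nodes, i)
        scale = float(np.prod(nodes[i] - others))
        derivs.append((Polynomial.fromroots(others) / scale).deriv())
    return derivs


def hierarchic_internal_derivs(p):
    """Assumed hierarchic modes: N_j(x) = sqrt((2j-1)/2) * integral_{-1}^{x} P_{j-1}(t) dt,
    j = 2..p, so that N_j'(x) = sqrt((2j-1)/2) * P_{j-1}(x)."""
    return [Legendre.basis(j - 1) * math.sqrt((2 * j - 1) / 2.0) for j in range(2, p + 1)]


def cond_lagrange(p):
    return float(np.linalg.cond(stiffness(lagrange_internal_derivs(p), p + 1)))


def cond_hierarchic(p):
    return float(np.linalg.cond(stiffness(hierarchic_internal_derivs(p), p + 1)))


for p in (3, 4, 6, 8):
    print(f"p={p}: Lagrange cond = {cond_lagrange(p):.3g}, "
          f"hierarchic cond = {cond_hierarchic(p):.3g}")
```

In this sketch the hierarchic internal block is the identity matrix because the Legendre polynomials are orthogonal, so its condition number does not grow with p. Full element matrices, which include vertex modes and possibly mass terms, behave less ideally; the sketch only shows why the choice of basis matters.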

The second objection was that high-order elements would require excessive computer time. − This problem was solved by proper ordering of the operations. If the task is stated in this way: “Compute (say) the maximum normal stress and verify that the result is accurate to within (say) 5 percent relative error”, then the p-version will require substantially fewer machine cycles than the h-version and virtually no user intervention.

The third objection was that mappings, other than isoparametric and subparametric mappings, fail to represent rigid body displacements exactly. − This is true but unimportant because the errors associated with rigid body modes converge to zero very fast [1].

The fourth objection was that solutions obtained using high-order elements oscillate in the neighborhoods of singular points. – This is true, but the oscillations are confined to the neighborhoods of singular points and the p-version is very efficient elsewhere, so its rate of convergence is nevertheless stronger than that of the h-version [1].

The fifth objection was the hardest one to overcome. There was a theoretical estimate of the error of approximation in energy norm which states:

$$\|\boldsymbol u_{ex} -\boldsymbol u_{fe}\|_E \le Ch^{\min(\lambda,p)} \quad (1)$$

On the left of this inequality is the error of approximation in energy norm. On the right, C is a positive constant, h is the size of the largest element of the mesh, λ is a measure of the regularity of the exact solution, usually a number less than one, and p is the polynomial degree. The argument was that since λ is usually a small number, the convergence rate is not affected no matter how high p is. This estimate is correct for the h-version. However, because C depends on p, it is not correct for the p-version [2].
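The difference between the two kinds of refinement can be seen in a small numerical experiment. The following is a sketch under assumptions that are not part of the article: the model problem is −u″ = f on (0, 1) with exact solution u(x) = x^0.7, which is singular at x = 0 and has λ = 0.2, and the energy norm is taken as the square root of the integral of (u′)², without the conventional factor 1/2. For this problem the finite element solution is the energy projection, so the error can be evaluated in closed form. The h-version uses n equal linear elements and the p-version uses a single element of degree p. All names are hypothetical.

```python
import math

ALPHA = 0.7  # assumed exponent of the model solution u(x) = x**ALPHA


def exact_energy_sq(alpha=ALPHA):
    """Integral of (u')^2 over (0, 1) for u = x**alpha, alpha > 1/2."""
    return alpha ** 2 / (2.0 * alpha - 1.0)


def h_error(n, alpha=ALPHA):
    """Energy-norm error for n equal linear elements.
    In 1D the FE solution of -u'' = f interpolates u at the nodes."""
    h = 1.0 / n
    fe = sum(((k * h) ** alpha - ((k - 1) * h) ** alpha) ** 2 / h
             for k in range(1, n + 1))
    return math.sqrt(max(exact_energy_sq(alpha) - fe, 0.0))


def p_error(p, alpha=ALPHA):
    """Energy-norm error for one element of degree p.
    u_fe' is the L2 projection of u' onto polynomials of degree p - 1."""
    beta = alpha - 1.0
    c = 1.0  # integral of u' against the constant Legendre polynomial
    fe = c * c
    for k in range(1, p):
        # integral of u' against the shifted Legendre polynomial P_k(2x - 1)
        c *= (beta - k + 1.0) / (beta + k + 1.0)
        fe += (2 * k + 1) * c * c
    return math.sqrt(max(exact_energy_sq(alpha) - fe, 0.0))


def rate(e1, e2, n1, n2):
    """Observed algebraic convergence rate between two discretizations."""
    return math.log(e1 / e2) / math.log(n2 / n1)


for m in (1, 2, 4, 8, 16):
    print(f"n = p = {m:2d}: h-version error = {h_error(m):.4f}, "
          f"p-version error = {p_error(m):.4f}")

print("h-version rate (n: 4 -> 8):", round(rate(h_error(4), h_error(8), 4, 8), 3))
print("p-version rate (p: 4 -> 8):", round(rate(p_error(4), p_error(8), 4, 8), 3))
```

In this experiment the h-version rate stays close to λ, as estimate (1) predicts, while the rate observed under p-refinement is roughly twice as large, which is why an argument based on (1) alone says nothing about the p-version.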

The sixth objection was that the p-version is not suitable for solving nonlinear problems. – This objection was answered when the German Research Foundation (DFG) launched a project in 1994 that involved nine university research institutes. The aim was to investigate adaptive finite element methods with reference to problems in the mechanics of solids [3]. The research was led by professors of mathematics and engineering.

As part of this project, the question of whether the p-version can be used for solving nonlinear problems was addressed. The researchers agreed to investigate a two-dimensional nonlinear model problem. The exact solution of the model problem was not known; therefore, a highly refined mesh with millions of degrees of freedom was used to obtain a reference solution. This is the “overkill” method. The researchers unanimously agreed at the start of the project that the refinement was sufficient for the corresponding finite element solution to be used as if it were the exact solution.

Professor Ernst Rank and Dr. Alexander Düster, of the Department of Construction Informatics of the Technical University of Munich, showed that the p-version can achieve significantly better results than the h-version, even when compared with adaptive mesh refinement, and recommended further investigation of complex material models with the p-version [4]. They were also able to show that the reference solution was not accurate enough. With this, the academic debate was decided in favor of the p-version. I attended the concluding conference held at the University of Hannover (now Leibniz University Hannover).

Understanding the Finite Element Method

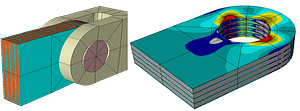

The finite element method is properly understood as a numerical method for the solution of ordinary and partial differential equations cast in a variational form. The error of approximation is controlled by both the finite element mesh and the assignment of polynomial degrees [2].

The separate labels of h- and p-version exist only for historical reasons: both the mesh (h) and the assignment of polynomial degrees (p) are important in finite element analysis. Hence, the h- and p-versions should not be seen as competing alternatives, but rather as integral components of an adaptable discretization strategy. Note that a code that has p-version capabilities can always be operated as an h-version code, but not the other way around.

There are other discretization strategies named X-FEM, Isogeometric Analysis, etc. They have advantages for certain classes of problems, but they lack the generality, adaptability, and efficiency of the finite element method implemented with p-version capabilities.

Outlook

Explainable Artificial Intelligence (XAI) will impose the requirements of reliability, traceability, and auditability on numerical simulation. This will lead to the adoption of methods that support solution verification and hierarchic modeling approaches in the engineering sciences.

Artificial intelligence tools will have the capability to produce smart discretizations based on the information content of the problem definition. The p-version, used in conjunction with properly designed meshes, is expected to play a pivotal role in that process.

References

[1] B. Szabó and I. Babuška, Finite Element Analysis. John Wiley & Sons, Inc., 1991.

[2] I. Babuška, B. Szabó and I. N. Katz, The p-version of the finite element method. SIAM J. Numer. Anal., Vol. 18, pages 515-545, 1981.

[3] E. Ramm, E. Rank, R. Rannacher, K. Schweizerhof, E. Stein, W. Wendland, G. Wittum, P. Wriggers, and W. Wunderlich, Error-controlled Adaptive Finite Elements in Solid Mechanics, edited by E. Stein. John Wiley & Sons Ltd., Chichester, 2003.

[4] A. Düster and E. Rank, The p-version of the finite element method compared to an adaptive h-version for the deformation theory of plasticity. Computer Methods in Applied Mechanics and Engineering, Vol. 190, pages 1925-1935, 2001.

Related Blogs:

- Where Do You Get the Courage to Sign the Blueprint?

- A Memo from the 5th Century BC

- Obstacles to Progress

- Why Finite Element Modeling is Not Numerical Simulation?

- XAI Will Force Clear Thinking About the Nature of Mathematical Models

Serving the Numerical Simulation community since 1989
