By Dr. Barna Szabó

Engineering Software Research and Development, Inc.

St. Louis, Missouri USA

In Thomas Kuhn’s terminology, “pre-science” refers to a period of early development in a field of research [1]. During this period, there is no established explanatory framework (paradigm) mature enough to solve the main problems. In the case of the finite element method (FEM), the period of pre-science started when reference [2] was published in 1956 and ended in the early 1970s when scientific investigation began in the applied mathematics community. The publication of lectures at the University of Maryland [3] and the first mathematical book on FEM [4] marked the transition to what Kuhn termed “normal science”.

Two Views

Engineers view FEM as an intuitive modeling tool, whereas mathematicians see it as a method for approximating the solutions of partial differential equations cast in variational form. On the engineering side, the emphasis is on implementation and applications, while mathematicians are concerned with clarifying the conditions for stability and consistency, establishing error estimates, and formulating extraction procedures for various quantities of interest.

From the beginning, a significant communication gap existed between the engineering and mathematical communities. Engineers did not understand why mathematicians would worry so much about the number of square-integrable derivatives, and mathematicians did not understand how engineers could find useful solutions even when the rules of variational calculus were violated. This gap widened over the years: On one hand, the art of finite element modeling became an integral part of engineering practice. On the other hand, the science of finite element analysis became an established branch of applied mathematics.

The Art of Finite Element Modeling

The art of finite element modeling has its roots in the pre-science period of finite element analysis when engineers sought to extend the matrix methods of structural analysis, developed for trusses and frames, to complex structures such as plates, shells, and solids. The major finite element modeling software products in use today, such as NASTRAN, ANSYS, MARC, and Abaqus, are all based on the understanding of the finite element method that existed before 1970. As long as the goal is to find force-displacement relationships, such as in load models of airframes and crash dynamics models of automobiles, finite element modeling can provide useful information. However, problems arise when the quantities of interest include (or depend on) the pointwise derivatives of the solution, as in strength analysis where stresses and strains are of interest.

Misplaced Accusations

The first mathematical book on the finite element method [4] dedicated a chapter to violations of the rules of variational calculus in various implementations of the finite element method. The title of the chapter is “Variational Crimes,” a catchphrase that quickly caught on. The variational crimes are charged as follows:

- Using non-conforming elements. Non-conforming elements are those that do not satisfy the interelement continuity requirements of the variational formulation.

- Using numerical integration.

- Approximating domains and boundary conditions.

The first item, using non-conforming elements, is a serious crime; however, the motivations for committing this crime can be negated by properly formulating mathematical models. The second and third items are not crimes; they are essential features of the finite element method, and the associated errors can be easily controlled. The authors were thinking about asymptotic error estimates (what happens when the diameter of the largest element goes to zero) that did not account for numerical integration or for the approximation of domains and boundary conditions. They did not want to bother with the complications caused by these features, so they declared them to be crimes. This may have been a clever move but certainly not a helpful one.
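The claim that the error of numerical integration is easy to control can be illustrated with a small sketch. It is not taken from reference [4]; the integrand and the helper names (`GAUSS_RULES`, `gauss_integrate`, `quadrature_errors`) are assumptions chosen for illustration. It applies standard Gauss-Legendre rules with 1, 2, and 3 points to a smooth integrand on [-1, 1] and records how the error shrinks as points are added.

```python
import math

# Standard Gauss-Legendre points and weights on [-1, 1] for n = 1, 2, 3.
GAUSS_RULES = {
    1: ([0.0], [2.0]),
    2: ([-1 / math.sqrt(3), 1 / math.sqrt(3)], [1.0, 1.0]),
    3: ([-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5)], [5 / 9, 8 / 9, 5 / 9]),
}


def gauss_integrate(f, n):
    """Approximate the integral of f over [-1, 1] with an n-point Gauss rule."""
    points, weights = GAUSS_RULES[n]
    return sum(w * f(x) for x, w in zip(points, weights))


def quadrature_errors(f, exact):
    """Return {n: absolute integration error} for every tabulated rule."""
    return {n: abs(gauss_integrate(f, n) - exact) for n in sorted(GAUSS_RULES)}


# Illustrative smooth integrand: exp(x), whose exact integral is e - 1/e.
errors = quadrature_errors(math.exp, math.e - 1 / math.e)

# An n-point rule is exact for polynomials of degree up to 2n - 1,
# so the 2-point rule integrates x**3 + x**2 exactly (value 2/3).
cubic_error = abs(gauss_integrate(lambda x: x**3 + x**2, 2) - 2 / 3)

for n, err in errors.items():
    print(f"{n}-point rule: error = {err:.2e}")
```

For a smooth integrand, the error drops rapidly with each added point. This is the sense in which the integration error is a controllable part of the total error, provided that enough points are used for the integrand at hand.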

Sherlock Holmes investigating variational crimes in Victorian London. Image generated by Microsoft Copilot.

Egregious Variational Crimes

The authors of reference [4] failed to mention the truly egregious variational crimes that are very common in the practice of finite element modeling today and will have to be abandoned if the reliability of predictions based on finite element computations is to be established:

- Using point constraints. Perhaps the most common variational crime is using point constraints for other than rigid body constraints. The finite element solution will converge to a solution that ignores the point constraints if such a solution exists; otherwise it will diverge. However, the rates of convergence or divergence are typically very slow. For the discretizations used in practice, the effect is hardly noticeable. So then, why should we worry about it? Either we are not approximating the solution to the problem we had in mind, or we are “approximating” a problem that has no solution. Finding an approximation to a solution that does not exist makes no sense, yet such occurrences are very common in finite element modeling practice. The apparent credibility of the finite element solution is owed to the near cancellation of two large errors: The conceptual error of using illegal constraints and the numerical error of not using sufficiently fine discretization to make the conceptual error visible. A detailed explanation is available in reference [5], Section 5.2.8.

- Using point forces in 2D and 3D elasticity (or more generally in 2D and 3D problems). In linear elasticity, the exact solution does not have finite strain energy when point forces are applied. Hence, any finite element solution “approximates” a problem that does not have a solution in energy space. Once again, divergence is very slow. When point forces are applied, element-by-element equilibrium is satisfied, and the effects of point forces are local, whereas the effects of point constraints are global. Generally, it is permissible to apply point forces in the region of secondary interest but not in the region of primary interest, where the goal is to compute quantities that depend on the derivatives, such as stresses and strains [5].

- Using reduced integration. At the time of the publication of their book [4], Strang and Fix could not have known about reduced integration, which was introduced a few years later [6]. Reduced integration was justified in typical finite element modeling fashion: Low-order elements exhibit shear locking and Poisson ratio locking. Since the elements that lock “are too stiff,” it is possible to make them softer by using fewer than the necessary integration points. The consequence was that the elements exhibited spurious “zero energy modes,” called “hourglassing,” that had to be controlled by various tuning parameters. For example, in the Abaqus Analysis User’s Manual, C3D8RHT(S) is identified as an “8-node trilinear displacement and temperature, reduced integration with hourglass control, hybrid with constant pressure” element. Tinkering with the integration rules may be useful in the art of finite element modeling when the goal is to tune stiffness relationships (as, for example, in crash dynamics models), but it is an egregious crime in finite element analysis because it introduces a source of error that cannot be controlled by mesh refinement or by increasing the polynomial degree, and it makes a posteriori error estimation impossible.

- Reporting computed data that do not converge to a finite value. For example, if a domain has one or more sharp reentrant corners in the region of primary interest, then the maximum stress computed from a finite element solution will be a finite number but will tend to infinity as the number of degrees of freedom is increased. It is not meaningful to report such a computed value: The error is infinitely large.

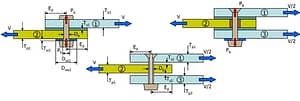

- Tricks used when connecting elements based on different formulations. For example, connecting an axisymmetric shell element (3 degrees of freedom per node) with an axisymmetric solid element (2 degrees of freedom) involves tricks of various sorts, most of which are illegal.
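The spurious zero energy mode mentioned in the item on reduced integration can be reproduced on a single element. The following is a minimal sketch under stated assumptions, not the formulation of any particular software product: one 4-node bilinear plane-stress element occupying the square [-1, 1] x [-1, 1], unit thickness, illustrative material constants, and hypothetical helper names (`strains`, `strain_energy`). It compares the strain energy of the hourglass displacement pattern under the 2 x 2 Gauss rule and under one-point (reduced) integration.

```python
import math

# Corner nodes of a bilinear element that coincides with its reference square,
# so the Jacobian is the identity and no coordinate mapping is needed.
CORNERS = [(-1.0, -1.0), (1.0, -1.0), (1.0, 1.0), (-1.0, 1.0)]


def strains(u, v, xi, eta):
    """Return (eps_xx, eps_yy, gamma_xy) at (xi, eta) for nodal values u, v."""
    exx = eyy = gxy = 0.0
    for (xi_i, eta_i), u_i, v_i in zip(CORNERS, u, v):
        # Derivatives of N_i = (1 + xi*xi_i) * (1 + eta*eta_i) / 4.
        dn_dx = xi_i * (1 + eta * eta_i) / 4
        dn_dy = eta_i * (1 + xi * xi_i) / 4
        exx += dn_dx * u_i
        eyy += dn_dy * v_i
        gxy += dn_dy * u_i + dn_dx * v_i
    return exx, eyy, gxy


def strain_energy(u, v, points, weights, E=1.0, nu=0.3):
    """Plane-stress strain energy from a tensor-product quadrature rule."""
    c = E / (1 - nu**2)
    energy = 0.0
    for xi, w_xi in zip(points, weights):
        for eta, w_eta in zip(points, weights):
            exx, eyy, gxy = strains(u, v, xi, eta)
            density = 0.5 * c * (
                exx**2 + eyy**2 + 2 * nu * exx * eyy + (1 - nu) / 2 * gxy**2
            )
            energy += w_xi * w_eta * density
    return energy


# Hourglass pattern: x-displacements alternate in sign around the element.
u_hourglass = [1.0, -1.0, 1.0, -1.0]
v_zero = [0.0, 0.0, 0.0, 0.0]

g = 1 / math.sqrt(3)
full_energy = strain_energy(u_hourglass, v_zero, [-g, g], [1.0, 1.0])
reduced_energy = strain_energy(u_hourglass, v_zero, [0.0], [2.0])

print("2x2 Gauss rule:     ", full_energy)
print("one-point (reduced):", reduced_energy)
```

The hourglass pattern corresponds to the displacement field u = ξη, whose strains vanish at the element center. The one-point rule samples only that point, so it assigns zero energy to a deformation that is not a rigid body motion, and the element offers no resistance to it. Hourglass control parameters are introduced to suppress this.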

Takeaway

The deeply ingrained practice of finite element modeling has its roots in the pre-science period of the development of the finite element method. To meet the current reliability expectations in numerical simulation, it will be necessary to routinely perform solution verification. This is possible only through the science of finite element analysis, respecting the rules of variational calculus. When thinking about digital transformation, digital twins, certification by analysis, and linking simulation with artificial intelligence tools, one must think about the science of finite element analysis and not the art of finite element modeling rooted in pre-1970s thinking.

References

[1] Kuhn, T. S. The structure of scientific revolutions. Vol. 962. University of Chicago Press, 1997.

[2] Turner, M.J., Clough, R.W., Martin, H.C. and Topp, L.J. Stiffness and deflection analysis of complex structures. Journal of the Aeronautical Sciences, 23(9), pp. 805-823, 1956.

[3] Babuška, I. and Aziz, A.K. Survey lectures on the mathematical foundations of the finite element method. The mathematical foundations of the finite element method with applications to partial differential equations (A. K. Aziz, ed.) Academic Press, 1972.

[4] Strang, G. and Fix, G. An analysis of the finite element method. Prentice Hall, 1973.

[5] Szabó, B. and Babuška, I. Finite Element Analysis: Method, Verification and Validation, 2nd ed. Hoboken, NJ: John Wiley & Sons, Inc., 2021.

[6] Hughes, T.J., Cohen, M. and Haroun, M. Reduced and selective integration techniques in the finite element analysis of plates. Nuclear Engineering and Design, 46(1), pp. 203-222, 1978.

Related Blogs:

- Where Do You Get the Courage to Sign the Blueprint?

- A Memo from the 5th Century BC

- Obstacles to Progress

- Why Finite Element Modeling is Not Numerical Simulation?

- XAI Will Force Clear Thinking About the Nature of Mathematical Models

- The Story of the P-version in a Nutshell

- Why Worry About Singularities?

- Questions About Singularities

- A Low-Hanging Fruit: Smart Engineering Simulation Applications

- The Demarcation Problem in the Engineering Sciences

- Model Development in the Engineering Sciences

- Certification by Analysis (CbA) – Are We There Yet?

- Not All Models Are Wrong

- Digital Twins

- Digital Transformation

- Simulation Governance

Serving the Numerical Simulation community since 1989
